The 2012 NeSA Technical Report contains the information needed to complete (and correct) the Grade 3 Reading Performance chart. The reported proportion passing was 76% for Grade 3 (Nebraska Accountability/NeSA Reading/Grade 3).

The observed average score reported was 70%. The estimated expected score was about 66%. [No calibration values were given for 10 fairly easy items at the beginning of the 2012 test.]

The students did better in 2012 on a test that may have been more difficult than the 2011 test. [The missing calibration data on the 10 easier items is critical to verifying what happened.]

[All students were presented with all 45 questions. Although the test was taken online, it was not a computer adaptive test (CAT). The test design item difficulty was 65%, which is 15 percentage points above the CAT design (50%).

“Experience suggests that multiple choice items are effective when the student is more likely to succeed than fail and it is important to include a range of difficulties matching the distribution of student abilities (Wright & Stone, 1979).” (2012 NeSA Technical Report, page 31)

The act of measuring should not alter the measurement. The Nebraska test seems to be a good compromise between what psychometricians want in order to optimize their calculations and what students are accustomed to in the classroom. CAT at 50% difficulty is not a good fit.]
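The gap between a 65% design difficulty and the 50% CAT target can be put on the logit scale. This is a minimal sketch that assumes the dichotomous Rasch model applies to these items; the function names are hypothetical and only the 65% and 50% figures come from the text above.

```python
import math

def rasch_p(ability, difficulty):
    """Probability of a right mark under the dichotomous Rasch model (in logits)."""
    return 1.0 / (1.0 + math.exp(-(ability - difficulty)))

def logit(p):
    """Ability minus difficulty (in logits) that yields success probability p."""
    return math.log(p / (1.0 - p))

# Figures from the post: 65% design difficulty versus the 50% CAT target.
gap_nesa = logit(0.65)  # student sits above the item difficulty
gap_cat = logit(0.50)   # student and item are matched

print(f"65% design: ability - difficulty = {gap_nesa:.3f} logits")
print(f"50% design: ability - difficulty = {gap_cat:.3f} logits")
```

Under that assumption, a 65% design places the typical student a little over half a logit above the typical item, while a CAT keeps student and item matched.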

Fifteen common (core) items were used in all three years: 2010, 2011, and 2012. They are remarkably stable. This testifies to the skill of the test creators in writing, calibrating, and selecting items that present a uniform challenge over the three years.

It also shows that little has changed in the entire educational system (teach, learn, assess) with respect to these items. [Individual classroom successes are hidden in a massive collection of several thousand test results.]

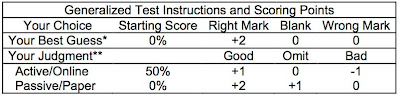

My challenge to Nebraska to include student judgment on standardized tests resulted in about the same number of hits on this blog as the number of letters mailed. No other contact occurred.

This means that standardized testing will continue counting right marks that may have very different meanings. At the lowest levels of thinking, good luck on test day will be an important contributing factor for passive pupils to pass a test where passing requires a score of 58% on a scale with a mean of 70%.
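The role of luck near the cut score can be illustrated with a simple binomial sketch. It assumes, purely for illustration, that the 58% cut applies to the raw percent right on the 45 items, that every item has four options, and that a student either knows an item or guesses blindly. None of these assumptions comes from the technical report, and `p_pass` is a hypothetical helper.

```python
from math import ceil, comb

N_ITEMS = 45     # from the post: every student saw all 45 questions
CUT = 0.58       # from the post: passing score
P_GUESS = 0.25   # ASSUMPTION: four-option items answered by blind guessing

def p_pass(known, n_items=N_ITEMS, cut=CUT, p_guess=P_GUESS):
    """Chance of reaching the cut when `known` items are answered correctly
    and every remaining item is a blind guess (hypothetical model)."""
    need = ceil(cut * n_items)        # right marks required to pass
    guesses = n_items - known         # items left to luck
    short = max(need - known, 0)      # lucky guesses still needed
    return sum(
        comb(guesses, k) * p_guess**k * (1 - p_guess) ** (guesses - k)
        for k in range(short, guesses + 1)
    )

for known in (18, 21, 24, 27):
    print(f"knows {known} of {N_ITEMS}: P(pass) = {p_pass(known):.3f}")
```

Students who know slightly fewer items than the cut requires pass or fail largely on their guesses, so identical counts of right marks can reflect quite different preparation.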

Students able to function at higher levels of thinking but with limited opportunity to prepare for the test will not be able to demonstrate the quality of what they do know or can do. Both groups will be ranked by right marks that have very different meanings.

The improvement in reading seen in the lower Nebraska grades (Nebraska Accountability/NeSA Reading) failed to carry over into the higher grades. Effective teachers can deliver better prepared students functioning at lower levels of thinking at the lower grades. [Student quality becomes essential at higher levels of thinking in the higher grades.]

Typically, where classrooms and assessments function at lower levels of thinking, the rate of increase in test scores decreases with each year (average Nebraska Grade 3 scores of 65%, 68%, and 69% on the 15 common items). Students and teachers need to break out of this short-term-success trap.
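The shrinking gains can be checked with a few lines of arithmetic. This sketch assumes the three averages are listed in chronological order (2010, 2011, 2012), which the post implies but does not state outright.

```python
# Average Grade 3 scores (percent) on the 15 common items, as quoted in the post.
# ASSUMPTION: listed in chronological order for 2010, 2011, 2012.
scores = [65, 68, 69]

# Year-over-year gain in percentage points.
gains = [later - earlier for earlier, later in zip(scores, scores[1:])]

print(gains)  # [3, 1]
```

The second gain is a third of the first, which is the pattern of diminishing returns described above.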

[And state education officials need to avoid the temptation, to which many gave in during the past decade of NCLB testing, to produce results that looked right. It is this troubled past that makes the missing expected item difficulty values for 10 of the easier 2012 test items so critical.]

The Common Core State Standards movement is planning to avoid the short-term-success trap. Students are to be taught to be self-correcting: question, answer, and verify. Students are to be rewarded for what they know and can do and for their judgment in using that knowledge and skill.

Over the long term, students are to develop the habits needed to be self-empowering and self-assessing. These habits function over the long term, in school and in the workplace. They provide the quality that is ignored with traditional right-count multiple-choice tests. In school, if you do not reward it, it does not count.

The partial credit Rasch model and Knowledge and Judgment Scoring allow students to elect to report what they trust they know and can do as the basis for further instruction and learning. Quantity and quality are both assessed and rewarded.
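How quantity and quality might be reported separately can be sketched as follows. The weights used here (2 points for a right mark, 1 for an omit, 0 for a wrong mark) are an assumption about one common way Knowledge and Judgment Scoring is described, not something stated in this post, and the function name and example counts are hypothetical.

```python
def kjs_report(right, wrong, omitted):
    """Hypothetical Knowledge and Judgment Scoring summary.

    ASSUMED weights: right mark = 2, omit = 1, wrong mark = 0.
    """
    total = right + wrong + omitted
    marked = right + wrong
    return {
        # Share of the available points earned under the assumed weights.
        "score": (2 * right + omitted) / (2 * total),
        # Quantity: right marks as a share of all items (the traditional count).
        "quantity": right / total,
        # Quality: right marks as a share of the items the student chose to mark.
        "quality": right / marked if marked else 0.0,
    }

# Illustrative counts only: a 45-item test with 15 items left unmarked.
report = kjs_report(right=27, wrong=3, omitted=15)
print(report)
```

In this illustration the student's traditional right count is the same as that of a student who marked every item and got 27 right, but the quality figure separates the two.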

Nebraska can still create a five-star standardized test.

Season's Greetings and a Happy New Year!